Paper: Understanding and Effectively Mitigating Code Review Anxiety

It's been a hot minute since I last wrote up notes, as I've been doing other things, but recently a coworker shared this paper from Carol S. Lee and Catherine M. Hicks titled Understanding and Effectively Mitigating Code Review Anxiety. To make a long story short, they developed a framework and intervention around code review anxiety based on research about social anxiety, ran a study validating it, and created a model of key metrics that influence code review avoidance. They also showed that a single workshop intervention helped reduce that anxiety.

That sounds like a lot of stuff, so I'll have to break it down a bunch.

First of all, the authors cover why code reviews are important: defect finding, learning and knowledge transfer, creative problem solving, and community building. However, these can happen only if participation is active, timely, and accurate.

Enter Code Review Anxiety (CRA), a concept referring to the fear of judgment, criticism and negative reviews when giving or receiving code reviews. Code Review Anxiety is not yet an empirically established concept, but it's been acknowledged by the industry.

The authors draw from social anxiety to better define it:

social anxiety is maintained and exacerbated by negative feedback loops that reinforce biased thinking and avoidance in social or performance situations. In particular, individuals experiencing social anxiety are less likely to believe that they have the skills to manage their anxiety (low anxiety self-efficacy) and are more likely to overestimate the cost and probability of situations ending poorly (high cost and probability bias)-all of which contribute to greater avoidance behaviors such as procrastinating, mentally “checking out,” or prematurely leaving social and evaluative situations.

In the context of code review anxiety, developers experiencing code review anxiety may similarly experience low anxiety self-efficacy, high cost and probability biases, and high avoidance. For example, they may believe that they are unable to manage their code review anxiety (low anxiety self-efficacy), believe that they are likely to break production (probability bias), and believe that this will be the end of their career as an engineer (cost bias), all of which increases their code review anxiety. Developers may subsequently procrastinate on code reviews and limit their cognitive engagement and receptiveness to feedback (e.g. by “rubber stamping” or skimming through feedback quickly instead of thinking about how they can learn from the feedback) as they “check out” to reduce their anxiety in the moment (avoidance)

This bit introduces many of the key concepts in the paper, which participants in the study would rate themselves on:

- Probability Bias (PB): likelihood of something negative happening during a review

- Cost Bias (CB): how bad it would be for something negative to happen

- Anxiety Self-Efficacy (ASE): their perceived ability to cope with anxiety

- Code Review Avoidance (CRA): how much a participant avoided code reviews during the last week

A few extra ones included are:

- Subjective Units of Distress Scale (SUDS): how high participants rated their anxiety and distress when thinking about giving or receiving a code review

- Self-Compassion (S-SCS): I believe this is how much people show themselves kindness rather than self-judgment, see failure as normal rather than isolating, and take a mindful approach to negative emotions.

- Behavioral Action Rating (BA): the likelihood of practicing the skills taught in the workshop

These concepts will turn out to be the main outcome measures and the components of their model of the code review anxiety phenomenon, which I'll come back to soon. The study was run by recruiting participants, keeping those who rated high enough on the Subjective Units of Distress Scale, and then splitting them into two groups.

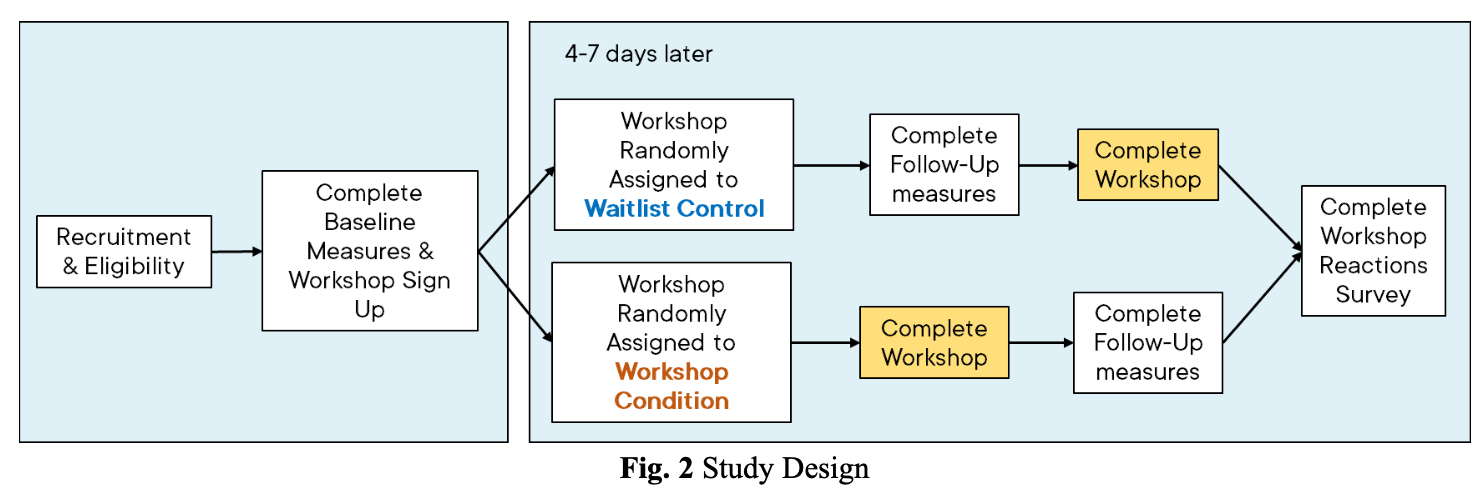

One group would complete a first self-assessment, wait 4 to 7 days, complete a second self-assessment, receive the code review anxiety workshop, and then give their reactions. The other group would complete the first self-assessment, wait 4 to 7 days, receive the workshop, complete a second self-assessment, and then give their reactions.
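To make the ordering concrete, here is a minimal sketch of the two schedules as described above. The step names and the helper function are my own illustrative labels, not terminology from the paper. The point it tries to show is that the second self-assessment falls before the workshop for one group and after it for the other, which is presumably what lets the two groups be compared.

```python
# Illustrative labels only; these step names are assumptions, not the paper's terms.
WORKSHOP_LAST = [
    "self_assessment_1",
    "wait_4_to_7_days",
    "self_assessment_2",
    "workshop",
    "reactions",
]
WORKSHOP_FIRST = [
    "self_assessment_1",
    "wait_4_to_7_days",
    "workshop",
    "self_assessment_2",
    "reactions",
]


def second_assessment_is_post_workshop(schedule):
    """True if the second self-assessment happens after the workshop."""
    return schedule.index("workshop") < schedule.index("self_assessment_2")


for name, schedule in [("workshop last", WORKSHOP_LAST), ("workshop first", WORKSHOP_FIRST)]:
    print(name, second_assessment_is_post_workshop(schedule))
```

Both groups share the same first assessment and the same wait, so any difference in the second assessment would plausibly come from whether the workshop had happened yet.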

I'm not quite sure what the delays were between the workshops and follow-ups, and whether that matters for the results—if their point is to measure workshop efficacy against a baseline rather than how much of a long-term effect it has, then it's all fine. As far as I can tell, the point is to establish the anxiety model and show that there is potential in borrowing methods from social anxiety (rather than "curing" it permanently) so this all seems coherent to my untrained eye.

So what was the workshop? Carol S. Lee (the paper's first author) ran a two-hour session with methods based on Cognitive-Behavioral Group Therapy and Dialectical Behavior Therapy (DBT). She's a clinical psychologist with experience intervening with clinical populations:

We deliberately chose content to directly target all three key processes of cognitive-behavioral approaches. For example, to increase awareness of internal experiences, the workshop began with psychoeducation on the prevalence, symptoms, and function of code review anxiety, followed by a self-monitoring and functional analysis exercise of anxiety symptoms. Participants also learned and practiced the “TIPP (changing body temperature, intense exercise, paced breathing, and progressive muscle relaxation)” relaxation techniques.

To reduce biased thinking and increase constructive and compassionate thinking, participants then learned and practiced identifying and restructuring negative automatic thoughts - negatively biased thoughts about ourselves and a situation’s outcomes that involuntarily occur. For example, a developer with the thought “if it doesn’t work as expected, they’ll think I’m stupid” might practice restructuring the thought into “things don’t work as expected all the time. It doesn’t mean someone is stupid,” by asking themselves questions like “Can I read minds? Do I know that they’ll think I’m stupid? Does something not working mean that someone is stupid? Am I putting unrealistic expectations on myself that I wouldn’t put on others?”

Finally, to reduce avoidance, participants learned adaptations of DBT’s “DEAR MAN” and “GIVE FAST” skills, which teach methods of effective and active engagement during interpersonal situations, such as gently providing and asking for validation and specific feedback. Participants were also asked to practice all of the skills learned in the workshop while actively engaging in their next code review.

For reference, DEAR MAN, GIVE, and FAST are DBT acronyms covering objective, relationship, and self-respect effectiveness, respectively. The authors also provide a really interesting rationale for their approach, where they chose to:

[...] prioritize self-compassion and anxiety self-efficacy over the probability and cost biases [...] to create an adaptive intervention applicable to a wide range of software team contexts and to increase the cultural sensitivity of our intervention. For example, unlike panic or specific phobias, where feared negative outcomes are highly unlikely to occur (e.g. dying or having a heart attack), feared social or performance anxiety-related outcomes can occur and are often frequently experienced (e.g. making a mistake). Because of this, targeting a negative automatic thought like “I will make mistake” by decreasing probability bias (“I probably won’t make a mistake”) can be contextually unhelpful, whereas targeting the thought by increasing self-compassion (“it’s okay if I make a mistake because mistakes are a part of learning and everyone makes them”) can be more broadly functional.

[...] decreasing cost bias (“it won’t be that bad”) can create avoidance and be invalidating, whereas targeting self-compassion and self-efficacy (“It’s them being sexist, it doesn’t mean there’s something actually wrong with me. It’s okay to feel hurt and I can do something to take care of myself.”) can be validating and empowering.

They then did a bunch of statistical analysis I'm going to skip over, and instead jump to what their experiment revealed in terms of a model:

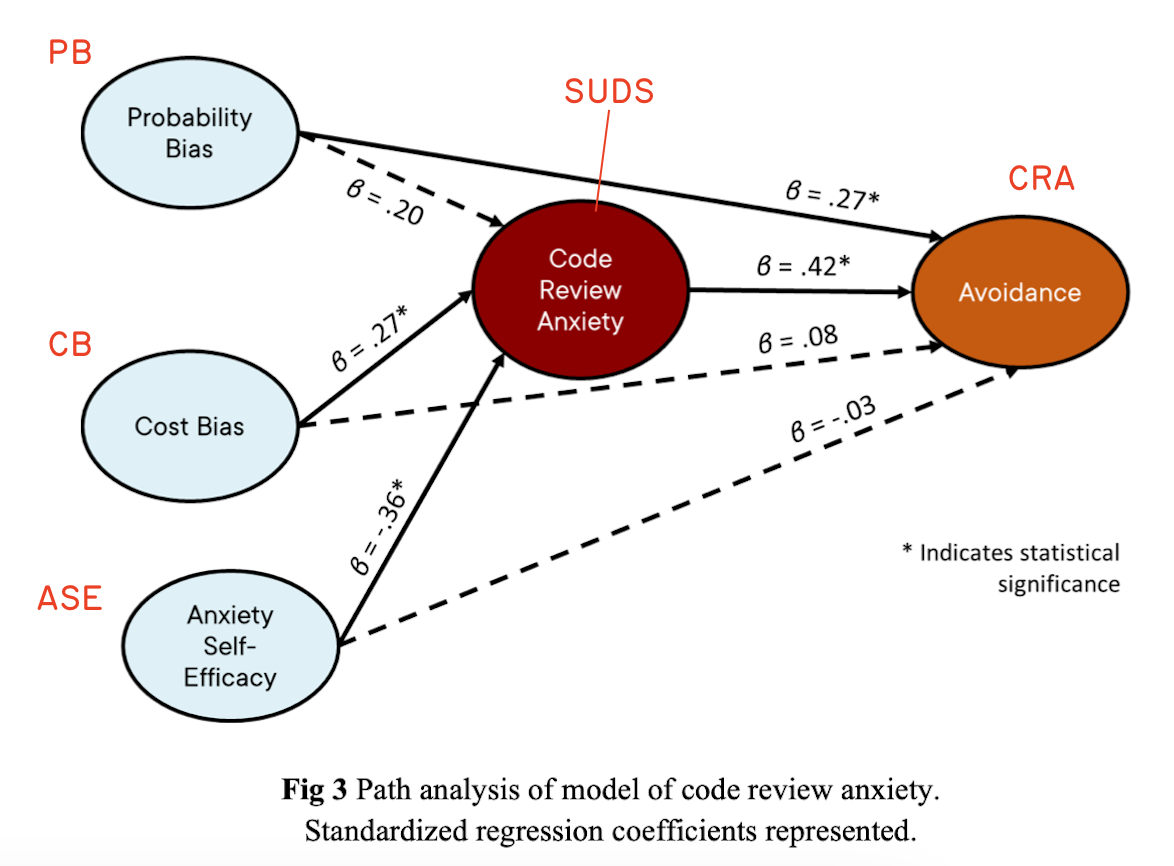

I've added the red labels with each acronym they used for metrics. Long story short, Cost Bias and Anxiety Self-Efficacy impacted Code Review Anxiety the most. The amount of anxiety felt, along with the perceived Probability Bias, determined how much participants avoided code reviews. Put together more clearly in a later figure, this is what they define:

They mention that code review anxiety was a bigger contributor to avoidance than probability bias. The more severe participants thought the consequences would be, and the less able they felt to manage their anxiety (the latter being most impactful), the stronger the avoidance. By looking at the second assessments, they were also able to show that their workshop had the most impact specifically on the self-efficacy scores, with some effect on self-compassion. The workshop had no significant effect on the probability or cost scores. Overall, the SUDS score (how anxious people rated themselves to be) was significantly improved, with a moderate effect.

To make this simple, that means that workshop participants were more forgiving of themselves and trusting in their ability to manage their own anxiety—this was the strongest effect—and this in turn reduced their anxiety as well, all after a single session.
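The structure of the model described above can be sketched as a small directed graph. This only encodes the relationships stated in these notes (cost bias and anxiety self-efficacy feed code review anxiety; anxiety and probability bias feed avoidance). The paper's figure may include more detail, there are deliberately no effect sizes here, and the names `MODEL_EDGES` and `upstream_factors` are hypothetical.

```python
# Each key points at the factors it directly influences, per the summary above.
# No weights or signs are included; this is structure only.
MODEL_EDGES = {
    "cost_bias": ["code_review_anxiety"],
    "anxiety_self_efficacy": ["code_review_anxiety"],
    "code_review_anxiety": ["code_review_avoidance"],
    "probability_bias": ["code_review_avoidance"],
}


def upstream_factors(target, edges=MODEL_EDGES):
    """Return every factor with a direct or indirect path to `target`."""
    found = set()
    frontier = [target]
    while frontier:
        node = frontier.pop()
        for source, destinations in edges.items():
            if node in destinations and source not in found:
                found.add(source)
                frontier.append(source)
    return found


print(sorted(upstream_factors("code_review_avoidance")))
```

Read this way, the workshop's strongest effect (on anxiety self-efficacy) sits two steps upstream of avoidance, acting through code review anxiety rather than on avoidance directly.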

Another interesting thing here is that they report demographic and firmographic data, which shows that code review anxiety is associated with neither experience (like being a junior dev) nor gender; any developer may feel anxiety here. They point out this counters common myths currently circulating in the industry.

They also point out a few limitations: the participants self-selected, which means they were interested in taking action about their anxiety (this isn't a random sample); the experiment was not double-blind (the facilitator could have inadvertently influenced results); and the main author being a clinical psychologist means it's not clear how well this could work with workshops administered by non-experts.

The authors conclude:

Finally, our research provides evidence that a single-session cognitive-behavioral workshop intervention can effectively reduce code review anxiety by significantly increasing anxiety self-efficacy and self-compassion. As this is a notably cost-effective protocol relative to the value and impact of code review activities throughout a developer’s career, this finding is an optimistic and important signal for the compounding benefit of empirically-justified interventions to create a more human-centered and healthier developer experience within technology companies.

As is usual for such papers, more research is required to show how generalizable this could be, but I still found the model to be really interesting.